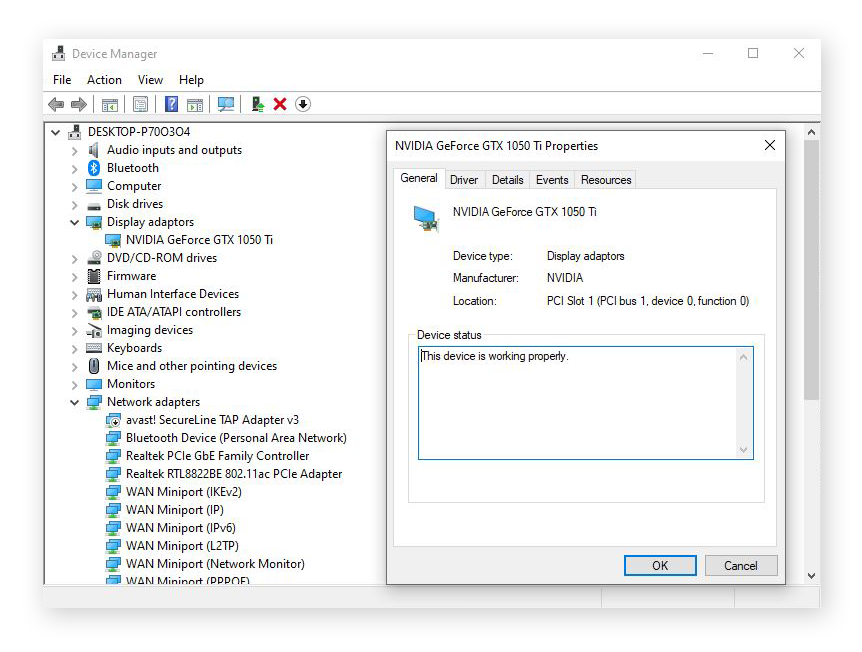

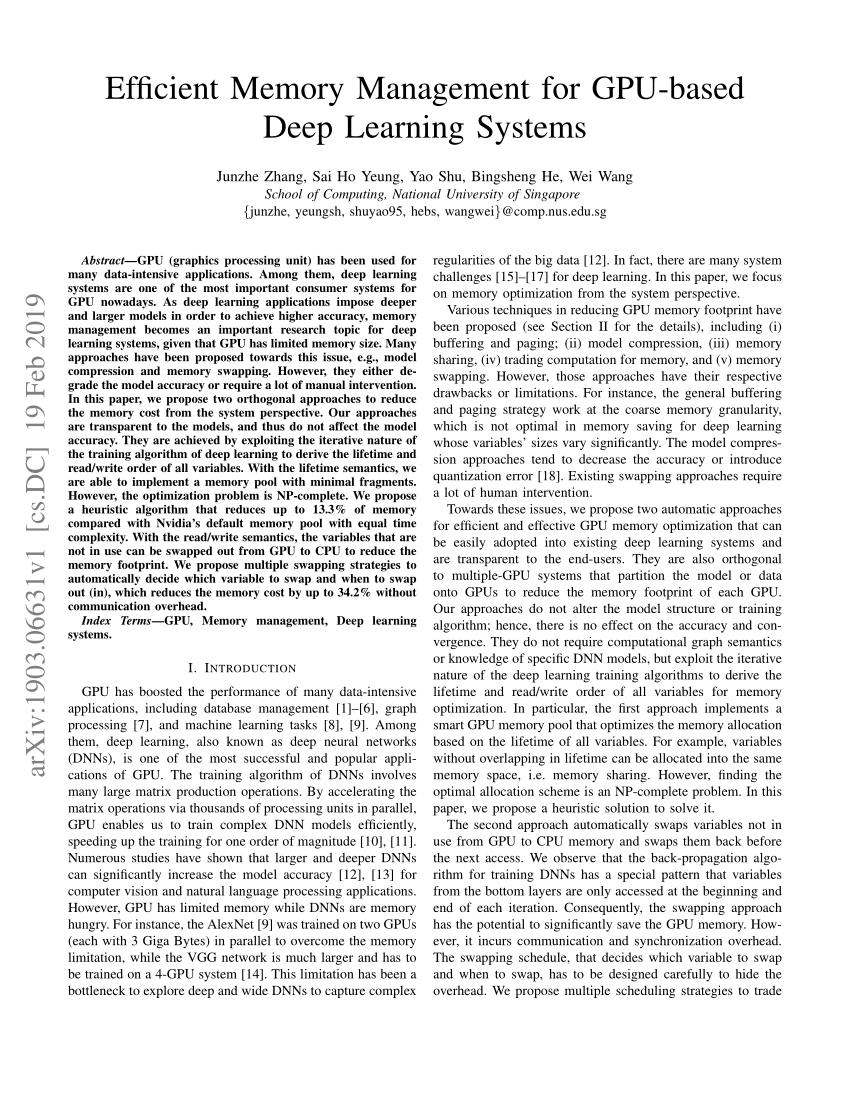

Harmony: Overcoming the Hurdles of GPU Memory Capacity to Train Massive DNN Models on Commodity Servers

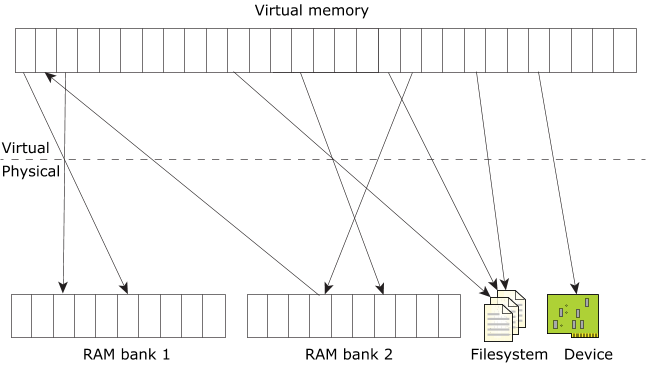

MemHC: An Optimized GPU Memory Management Framework for Accelerating Many-body Correlation | ACM Transactions on Architecture and Code Optimization

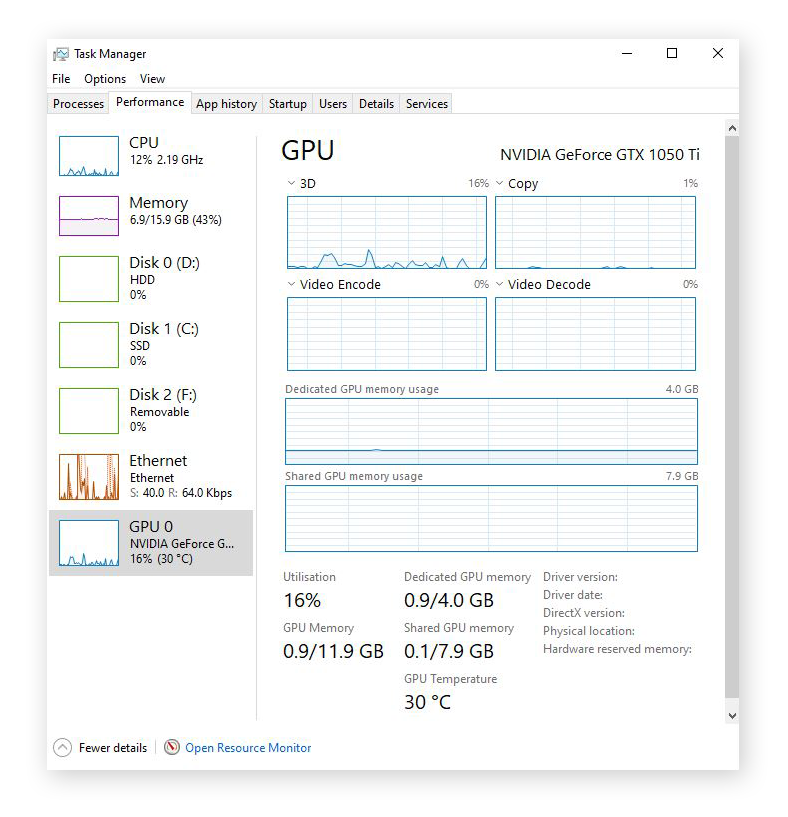

Optimizing very large neural network that is greater than size of GPU memory - Discussion Zone - Ask.Cyberinfrastructure

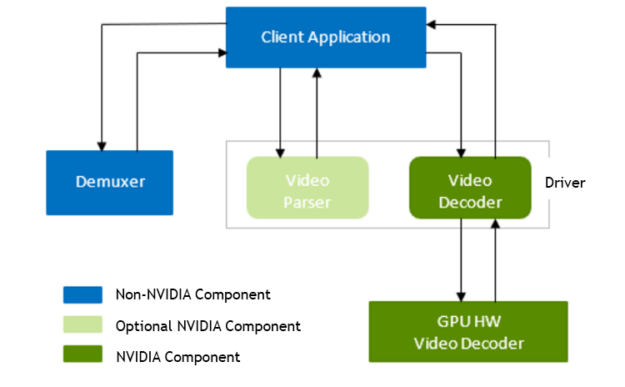

Optimizing Video Memory Usage with the NVDECODE API and NVIDIA Video Codec SDK | NVIDIA Technical Blog

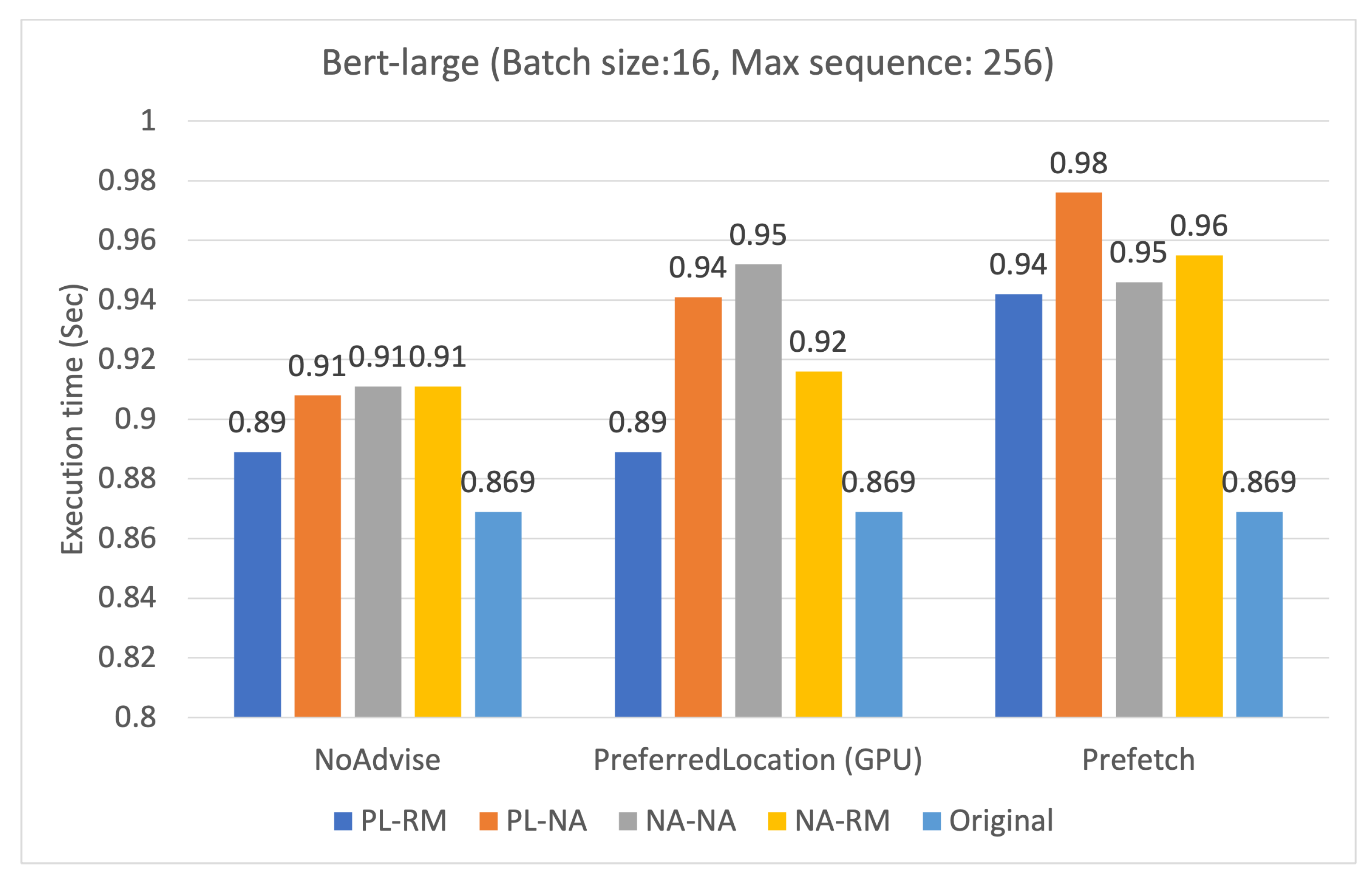

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training