GitHub - Desklop/optimized_tensorflow_wheels: Optimized versions TensorFlow and TensorFlow-GPU for specific CPUs and GPUs (for both old and new).

AMD Unveils New Power-Efficient, High-Performance Mobile Graphics for Premium and Thin-and-Light Laptops, and New Desktop Graphics Cards - Edge AI and Vision Alliance

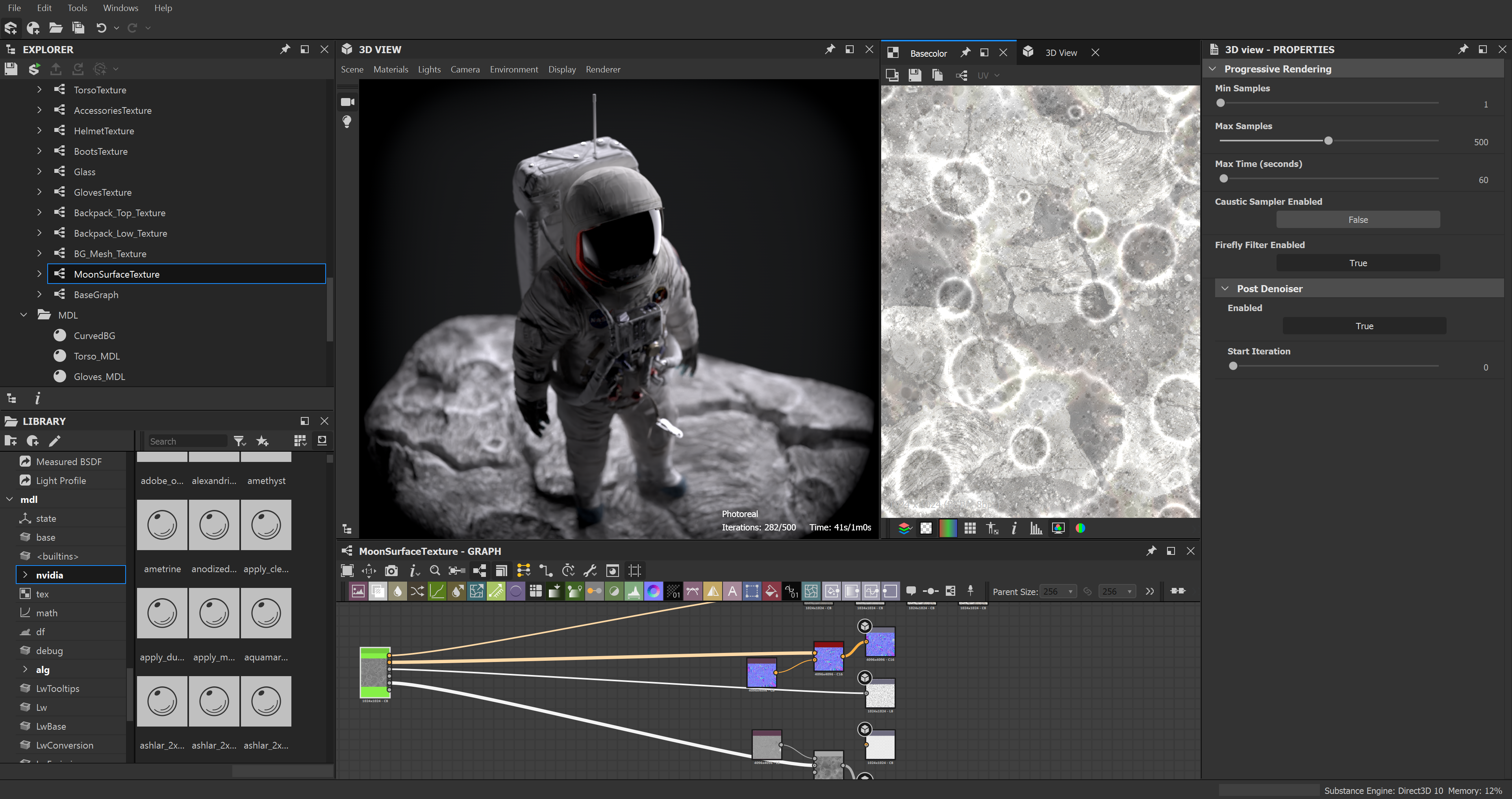

New Studio Driver Now Available, Optimizes Performance For Cinema 4D R21 and Other Top Creative Apps | GeForce News | NVIDIA

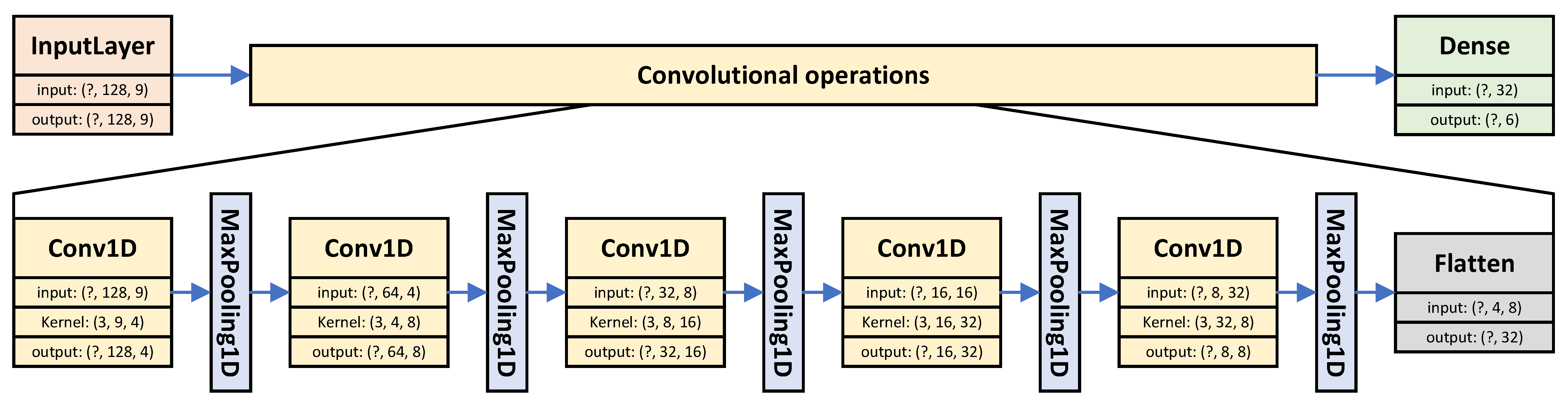

JLPEA | Free Full-Text | Big–Little Adaptive Neural Networks on Low-Power Near-Subthreshold Processors

![[Updated for PixInsight 1.8.8-6] PixInsight, StarNet++ and CUDA - Gotta Go Fast - _darkSkies Astrophotography](https://darkskies.space/wp-content/uploads/2020/07/image-12.png)